1. Introduction

The concept of the metaverse has captured the imagination of technologists, gamers, and business leaders alike. As a collective virtual shared space, created by the convergence of virtually enhanced physical and digital reality, the metaverse is poised to become the next significant frontier in internet and technology development. It promises an immersive environment where people can meet, work, and play using avatars, transcending the limitations of the physical world.

The development of the metaverse involves the integration of various technologies including virtual reality (VR), augmented reality (AR), artificial intelligence (AI), and blockchain, among others. This integration aims to create a seamless and interactive experience that is as engaging as the real world. As companies like Facebook (now Meta), Google, and Microsoft invest heavily in this space, the potential for new forms of interaction and a new digital economy becomes increasingly evident.

1.1 Overview of Metaverse Development

Metaverse development refers to the process of creating and maintaining a virtual world that users can access via the internet. This development encompasses a range of disciplines including 3D modeling, coding, AI, and network management. The goal is to create a fully immersive and interactive environment where users can engage in a variety of activities such as social interactions, gaming, and virtual commerce.

The architecture of the metaverse is designed to be highly scalable and capable of supporting a vast number of users simultaneously. This requires robust backend infrastructure and advanced computing capabilities. Developers also focus on creating user-friendly interfaces and realistic experiences, which are crucial for user engagement and retention. For more detailed insights into metaverse development, TechCrunch offers a range of articles and resources that can provide deeper understanding.

Here is an architectural diagram illustrating the integration of technologies in metaverse development:

1.2 Importance of 3D Models in the Metaverse

3D models are at the heart of the visual and interactive experience in the metaverse. They represent objects, avatars, and environments in a three-dimensional space, making the virtual world visually rich and engaging. The quality and realism of these models play a crucial role in how immersive the metaverse feels to its users. Detailed and lifelike models can significantly enhance the user experience, making virtual interactions more compelling and realistic.

In addition to enhancing visual appeal, 3D models are also crucial for the functionality of the metaverse. They are used in everything from the design of virtual spaces and items to the creation of user avatars and interactive tools. As such, the development of 3D models requires a high level of skill in 3D graphics and animation. For those interested in the technical aspects of 3D modeling in the metaverse, resources such as Autodesk provide valuable information and tools that are widely used in the industry.

2. What are 3D Models in Metaverse Development?

3D models are essential components in the development of the Metaverse, serving as the building blocks for creating immersive and interactive virtual environments. These models are digital representations of objects or systems that simulate their real-world counterparts in three dimensions, encompassing height, width, and depth. They are crafted using specialized software and can be as simple as a digital representation of a piece of furniture or as complex as an entire cityscape.

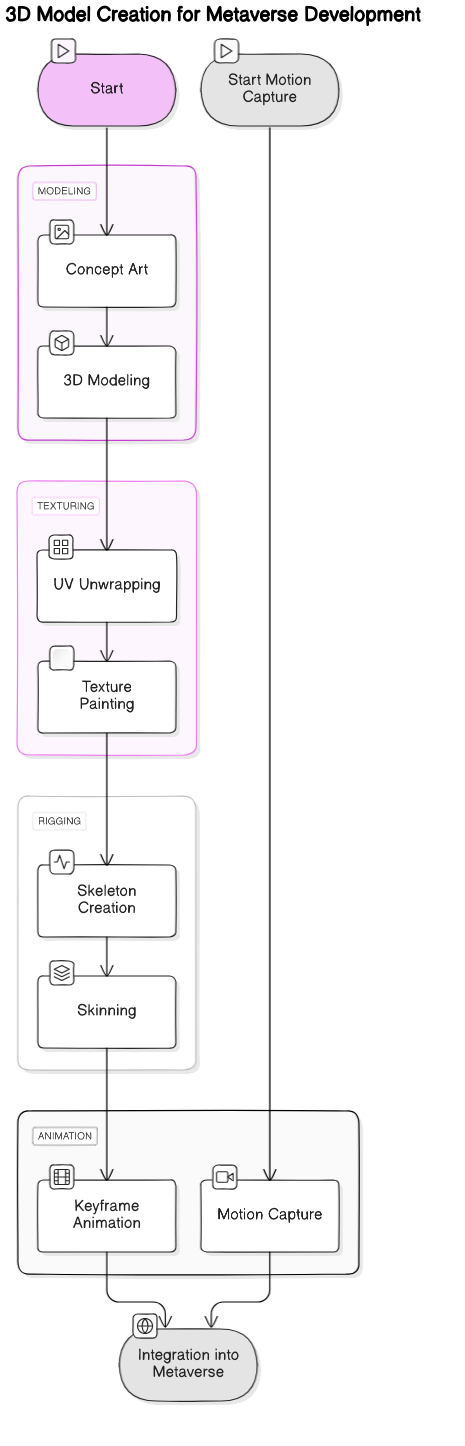

The creation of 3D models typically involves modeling, texturing, rigging, and animation. Modeling is the first step: the basic shape of the object is built in 3D software, usually from polygons. Texturing follows. The model's surface is unwrapped into a flat 2D layout (UV mapping) so that 2D images can be wrapped onto it to provide color and surface detail. Rigging adds a skeleton of bones (joints) and binds the mesh to it so the model can move, which is crucial for animating characters. Finally, animation brings the model to life by defining how those bones, and therefore the mesh, move over time. The finished models are then imported into Metaverse platforms, where they are used to build the virtual worlds.

2.1 Definition and Core Components

In Metaverse development, a 3D model is a digital representation of an object, character, or environment. Its core components are vertices (points in 3D space), edges (lines connecting pairs of vertices), and faces (flat polygons, usually triangles or quadrilaterals, bounded by edges and together forming the model's surface). These elements define the geometry of the model. 3D models also usually include textures and materials. Textures are 2D bitmap images mapped onto the surface to add color and detail. Materials define surface properties such as shininess and transparency.
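The sketch below makes the vertex, edge, and face vocabulary concrete with a minimal indexed mesh in Python. It assumes a common convention: vertex positions are stored once, and each face is a list of vertex indices. The `Mesh` class and the `unit_cube` helper are hypothetical names for illustration, not part of any particular tool's API. Edges are derived from the faces rather than stored.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]

@dataclass
class Mesh:
    vertices: list[Vec3]           # positions, stored once and shared between faces
    faces: list[tuple[int, ...]]   # each face lists vertex indices in boundary order

    def edges(self) -> set[tuple[int, int]]:
        """Derive unique edges by walking each face's boundary (edges are not stored)."""
        result: set[tuple[int, int]] = set()
        for face in self.faces:
            for i, a in enumerate(face):
                b = face[(i + 1) % len(face)]
                result.add((min(a, b), max(a, b)))
        return result

def unit_cube() -> Mesh:
    """Hypothetical helper: an axis-aligned unit cube with six quad faces."""
    # Vertex index = 4*x + 2*y + z for x, y, z in {0, 1}.
    verts = [(float(x), float(y), float(z)) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
    # Faces are wound counter-clockwise when seen from outside the cube.
    faces = [
        (0, 1, 3, 2), (4, 6, 7, 5),  # x = 0 and x = 1
        (0, 4, 5, 1), (2, 3, 7, 6),  # y = 0 and y = 1
        (0, 2, 6, 4), (1, 5, 7, 3),  # z = 0 and z = 1
    ]
    return Mesh(verts, faces)

cube = unit_cube()
v, e, f = len(cube.vertices), len(cube.edges()), len(cube.faces)
print(v, e, f, v - e + f)  # 8 12 6 2
```

A closed mesh without holes, like this cube, satisfies Euler's formula V - E + F = 2 (here 8 - 12 + 6). This makes a quick sanity check when writing mesh-processing code.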

The complexity of a 3D model can vary significantly based on its intended use within the Metaverse. For instance, models intended for background scenery might be less detailed compared to those designed for close-up interactions. Tools like Blender, Maya, and 3ds Max are commonly used for creating detailed 3D models suitable for Metaverse applications.

2.2 Role of 3D Models in Immersive Environments

In the Metaverse, 3D models play a pivotal role in creating immersive environments that engage users. They are not only used to represent physical objects but also to create the setting and atmosphere of virtual spaces. These models help in constructing a sense of realism and spatial awareness, which are crucial for user interaction and engagement in the virtual world. For example, in a virtual reality (VR) game, players interact with 3D models as part of the gameplay, which enhances the immersive experience.

Moreover, 3D models are integral in providing interactive experiences. They can be programmed to respond to user actions, such as opening doors, moving objects, or triggering animations, which makes the environment dynamic and engaging. The realism offered by high-quality 3D models can significantly affect user presence, a psychological state where virtual experiences feel real. This aspect of 3D modeling is crucial for applications such as virtual training simulations, educational programs, and interactive storytelling.

By understanding the role and construction of 3D models, developers can better harness their potential to create compelling and interactive Metaverse experiences.

3. How to Create High-Performance 3D Models

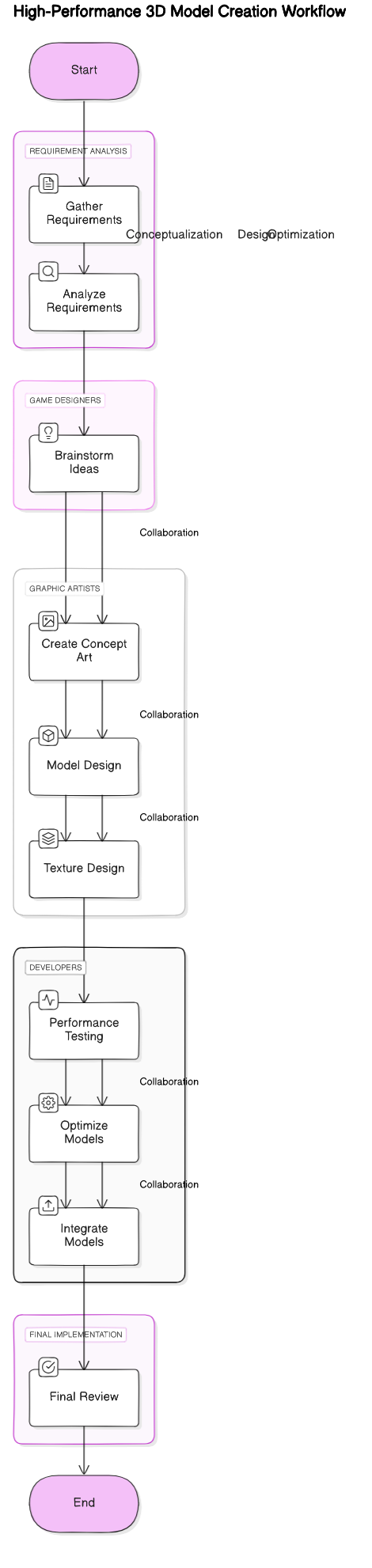

Creating high-performance 3D models is crucial for various applications, including video games, simulations, and virtual reality. The process involves several detailed steps that ensure the final model is optimized for performance while still maintaining high visual quality.

3.1 Planning and Conceptualization

The first step in creating a high-performance 3D model is planning and conceptualization. This phase is about laying the groundwork for what the model will become, including its purpose, functionality, and aesthetic appeal.

3.1.1 Understanding the Requirements

Understanding the requirements is critical in the planning and conceptualization stage of 3D modeling. This involves gathering and analyzing all necessary information that will influence the design and functionality of the model. It's important to consider the end use of the model—whether it will be used in a game, an educational simulation, or as part of a virtual reality experience. Each of these applications may have different requirements in terms of complexity, realism, and performance.

For instance, a model intended for a high-end video game needs to be detailed and realistic but also optimized to run smoothly on gaming systems. This means understanding the limitations and capabilities of the hardware it will run on, which directly influences the level of detail and the techniques used in the modeling process. Autodesk provides a comprehensive guide on understanding these requirements and starting the 3D modeling process (Autodesk).

Additionally, it's essential to communicate with other team members, including game designers, developers, and graphic artists, to ensure that the model meets all specified needs. This collaborative approach helps in refining the model requirements and can significantly influence the planning phase.

By thoroughly understanding the requirements, 3D modelers can create a clear and effective plan that serves as a blueprint for the entire modeling process. This step is foundational and sets the stage for the subsequent phases of model creation, ensuring that the final product not only looks good but also performs well in its intended environment. For further reading on the initial stages of 3D modeling, Bloop Animation offers an excellent starting point (Bloop Animation).

3.1.2 Sketching and Storyboarding

Sketching and storyboarding are essential processes in the development of visual media, from films to video games and animations. Sketching is the art of creating preliminary drawings or paintings, often used to explore ideas and compositions quickly. These sketches serve as a visual brainstorming tool that helps artists and designers communicate concepts and refine their ideas.

Storyboarding, on the other hand, takes the sketching process a step further by organizing these sketches into a sequence. This technique is crucial in planning the narrative flow of a project. Storyboards lay out, scene by scene, how the story unfolds, giving a linear view of the progression of events. This method is particularly useful in film and animation, where visual coherence and timing are critical.

For more detailed insights into storyboarding, you might want to visit websites like Bloop Animation (https://www.bloopanimation.com/storyboarding/), which offers tutorials and tips on creating effective storyboards. Another good resource is the Toptal Design Blog (https://www.toptal.com/designers/storyboards), where you can find a guide on the technical aspects of storyboarding and its importance in project planning.

3.2 Modeling Techniques

In the realm of 3D graphics, modeling is a fundamental technique used to create a three-dimensional representation of any surface or object. There are several techniques used in 3D modeling, each suitable for different types of projects. Some of the most common methods include polygon modeling, NURBS (Non-Uniform Rational B-Splines) modeling, and sculpting. Each technique has its own set of tools and approaches that cater to various design needs.

Polygon modeling, for instance, uses vertices, edges, and faces to create a mesh. NURBS modeling is used for creating smooth surfaces with fewer control points, making it ideal for automotive and product design. Sculpting, popularized by software like ZBrush, allows for intuitive mesh manipulation, mimicking traditional clay sculpting.

A comprehensive guide to these techniques can be found on Creative Bloq (https://www.creativebloq.com/3d-tips/modeling-tips-1232739), which provides tips and tricks for better 3D modeling. Additionally, Autodesk has extensive tutorials and articles that help beginners and professionals alike master various modeling techniques (https://www.autodesk.com/solutions/3d-modeling-software).

3.2.1 Polygon Modeling

Polygon modeling is one of the most widely used techniques in 3D modeling, especially in the video game and film industry. This method involves creating a 3D object using polygons, typically triangles or quadrilaterals, which are connected by vertices and edges to form a mesh. The simplicity of polygon modeling makes it highly efficient for both creating and rendering models in real-time applications.
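GPUs rasterize triangles, so quads and other polygons in a polygon-modeled mesh are split into triangles before rendering. Either the modeling tool does this on export or the engine does it on import. The sketch below shows the simplest approach, fan triangulation. It assumes a convex face whose vertex indices are listed in boundary order. Concave faces need a more careful method, such as ear clipping. `triangulate_fan` is an illustrative helper, not a library function.

```python
def triangulate_fan(face: tuple[int, ...]) -> list[tuple[int, int, int]]:
    """Split a convex polygon (vertex indices in boundary order) into triangles sharing face[0]."""
    if len(face) < 3:
        raise ValueError("a face needs at least three vertices")
    # An n-sided face yields n - 2 triangles; each keeps the face's original winding.
    return [(face[0], face[i], face[i + 1]) for i in range(1, len(face) - 1)]

quad = (0, 1, 3, 2)                             # e.g. one quad face of a cube
print(triangulate_fan(quad))                    # [(0, 1, 3), (0, 3, 2)]
print(len(triangulate_fan((0, 1, 2, 3, 4))))    # 3
```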

The technique allows for considerable control over the mesh structure, which is crucial for optimizing the model for performance, particularly in video games where real-time rendering is essential. Polygon models are also easier to unwrap and texture compared to other types of models, making them highly popular among texture artists.

For those interested in learning more about polygon modeling, 3DTotal (https://www.3dtotal.com) offers tutorials and project walkthroughs that cover various aspects of this technique. Another valuable resource is CG Spectrum (https://www.cgspectrum.com), which provides detailed courses and professional guidance on polygon modeling, helping you to enhance your skills in this area.

3.2.2 NURBS and Subdivision Surfaces

NURBS, or Non-Uniform Rational B-Splines, are a mathematical representation of 3D geometry. They allow the representation of complex shapes and surfaces in a compact and efficient manner. NURBS are widely used in computer graphics, animation, and CAD (Computer-Aided Design) because they offer great flexibility and precision for modeling forms ranging from simple to complex organic shapes. One of the key features of NURBS is their ability to accurately represent both standard geometric shapes (like cones and spheres) and free-form surfaces. For more detailed information on NURBS, Autodesk provides a comprehensive overview (https://www.autodesk.com).
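The claim that NURBS can represent standard shapes exactly can be checked numerically. The sketch below evaluates a degree-2 NURBS curve with the Cox-de Boor recursion, using the standard quarter-circle construction: control points (1, 0), (1, 1), (0, 1), middle weight √2/2, and a clamped knot vector. The function names are illustrative. A production evaluator would use a faster, non-recursive formulation.

```python
import math

def basis(i: int, p: int, u: float, knots: list[float]) -> float:
    """Cox-de Boor recursion for the B-spline basis function N_{i,p}(u)."""
    if p == 0:
        if knots[i] <= u < knots[i + 1]:
            return 1.0
        # Close the last non-empty knot span so that u == knots[-1] is included.
        if u == knots[-1] and knots[i] < knots[i + 1] == knots[-1]:
            return 1.0
        return 0.0
    left = right = 0.0
    if knots[i + p] != knots[i]:
        left = (u - knots[i]) / (knots[i + p] - knots[i]) * basis(i, p - 1, u, knots)
    if knots[i + p + 1] != knots[i + 1]:
        right = (knots[i + p + 1] - u) / (knots[i + p + 1] - knots[i + 1]) * basis(i + 1, p - 1, u, knots)
    return left + right

def nurbs_point(u, degree, ctrl, weights, knots):
    """Rational curve point: control points averaged with weights w_i * N_{i,p}(u)."""
    terms = [basis(i, degree, u, knots) * w for i, w in enumerate(weights)]
    total = sum(terms)
    x = sum(t * px for t, (px, _) in zip(terms, ctrl)) / total
    y = sum(t * py for t, (_, py) in zip(terms, ctrl)) / total
    return x, y

# Quarter of a unit circle as a degree-2 NURBS curve.
ctrl = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
weights = [1.0, math.sqrt(2) / 2, 1.0]
knots = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]

radii = [math.hypot(*nurbs_point(u / 10, 2, ctrl, weights, knots)) for u in range(11)]
print(max(abs(r - 1.0) for r in radii))  # tiny: every sample lies on the circle up to rounding
```

An ordinary polynomial curve can only approximate a circle. The rational weights are what make the exact representation possible.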

Subdivision surfaces, on the other hand, are a technique used in computer graphics to create smooth surfaces through the iterative subdivision of polygonal meshes. This method is particularly popular in film and animation industries, as it allows for highly detailed and smooth surfaces that can be easily manipulated. Subdivision surfaces work by approximating a smooth surface over a mesh of polygons by subdividing each polygon into smaller parts, refining and smoothing the surface iteratively. Pixar's OpenSubdiv library offers an industry-standard implementation of this technique, which is detailed on their official page (https://graphics.pixar.com/opensubdiv/docs/intro.html).
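Subdivision is easiest to see in one dimension. The sketch below applies Chaikin's corner-cutting rule to a closed polygon. Each edge is replaced by two points, at 1/4 and 3/4 along it, and repeating the step converges toward a smooth quadratic B-spline curve. This 2D analogue is chosen for brevity. It is not the Catmull-Clark surface scheme used in tools such as OpenSubdiv, which applies the same refine-and-smooth idea to polygon meshes. `chaikin` is an illustrative name.

```python
Point = tuple[float, float]

def chaikin(points: list[Point], iterations: int = 1) -> list[Point]:
    """Chaikin corner cutting on a closed polygon: each pass doubles the vertex count."""
    for _ in range(iterations):
        refined: list[Point] = []
        n = len(points)
        for i in range(n):
            (x0, y0), (x1, y1) = points[i], points[(i + 1) % n]
            refined.append((0.75 * x0 + 0.25 * x1, 0.75 * y0 + 0.25 * y1))  # point 1/4 along the edge
            refined.append((0.25 * x0 + 0.75 * x1, 0.25 * y0 + 0.75 * y1))  # point 3/4 along the edge
        points = refined
    return points

square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
smooth = chaikin(square, iterations=3)
print(len(smooth))  # 32
```

Each pass doubles the point count (4, 8, 16, 32). Surface subdivision has the same trade-off: smoother results cost geometrically more geometry, so the number of subdivision levels is usually kept small.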

Both NURBS and subdivision surfaces are crucial in the fields of animation, automotive design, and architectural visualization, providing tools that can create highly detailed, scalable, and realistic models.

3.3 Optimization Strategies

In 3D graphics and game development, optimization strategies are critical for improving performance while preserving the visual quality of environments and characters. Optimization covers a variety of techniques that reduce the computational load on the CPU and GPU and make the rendering process more efficient.

3.3.1 Level of Detail (LOD) Techniques

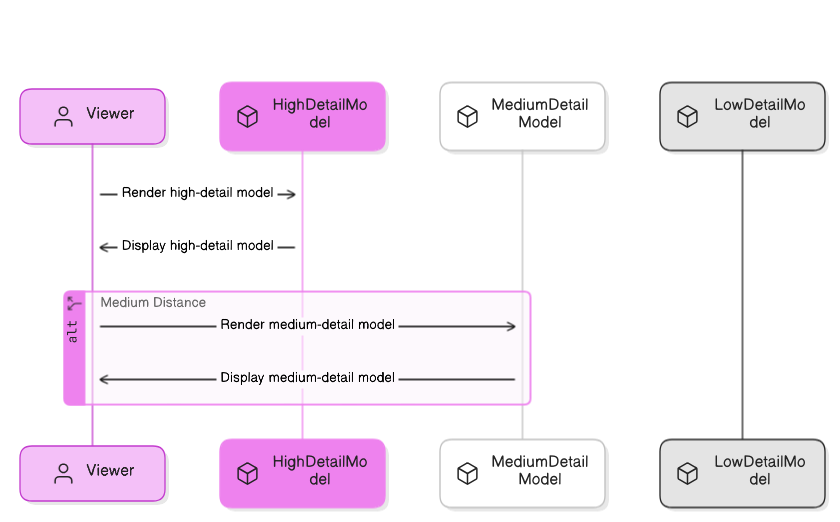

Level of Detail (LOD) is an important optimization technique used in video games and simulations to improve rendering performance. LOD reduces the complexity of a 3D model as its distance from the viewer increases. A distant object covers only a few pixels on screen, so most of its fine detail would not be visible anyway. Rendering a simplified version therefore saves processing power and improves overall frame rate. Approaches to LOD range from swapping between models of different complexity to lowering texture resolution or generating detail procedurally.

A practical application of LOD can be seen in vast open-world games, where detailed models of characters or objects are used when close to the camera, but simpler, less resource-intensive versions are swapped in as the distance increases. This ensures that the game runs smoothly without sacrificing visual quality in the immediate vicinity of the player. Unity, a popular game development engine, provides built-in support for LOD with tutorials and documentation available on their official site (https://unity.com).

Implementing LOD requires careful planning and testing to ensure that the transitions between different levels of detail are seamless and do not distract the player. It is a balance between maintaining aesthetic quality and ensuring optimal performance, crucial for the success of any graphics-intensive application.
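The sketch below shows distance-based LOD selection. It assumes LOD 0 is the most detailed mesh and that each threshold is a camera distance, in hypothetical scene units, at which the next coarser LOD takes over. To reduce visible popping when the camera hovers near a threshold, it adds a hysteresis band. The switch to a coarser LOD happens at the threshold, but switching back requires moving a little closer. `LodSelector` is an illustrative class. Engines differ here: Unity's LOD Group, for example, defines transitions as a fraction of screen height rather than raw distance.

```python
from dataclasses import dataclass

@dataclass
class LodSelector:
    """Distance-based LOD choice with hysteresis. LOD 0 is the most detailed mesh."""
    thresholds: list[float]   # ascending distances where LOD i hands over to LOD i + 1
    hysteresis: float = 2.0   # extra distance required before switching back to a finer LOD
    current: int = 0

    def select(self, distance: float) -> int:
        # Moving away: step to coarser LODs as soon as a threshold is crossed.
        while self.current < len(self.thresholds) and distance > self.thresholds[self.current]:
            self.current += 1
        # Moving closer: only return to a finer LOD once clearly inside its range.
        while self.current > 0 and distance < self.thresholds[self.current - 1] - self.hysteresis:
            self.current -= 1
        return self.current

selector = LodSelector(thresholds=[10.0, 30.0, 60.0])
print([selector.select(d) for d in (5.0, 11.0, 9.0, 7.0, 35.0, 100.0)])  # [0, 1, 1, 0, 2, 3]
```

At 9 units the object keeps LOD 1 because it is still inside the hysteresis band. It only returns to LOD 0 at 7 units, below the 8-unit cutoff.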

3.3.2 Texture and Material Optimization

Texture and material optimization in 3D modeling is crucial for creating visually appealing and performance-efficient environments, especially in the context of the Metaverse. This process involves adjusting the resolution and complexity of textures and materials used on 3D models to ensure they look realistic without consuming excessive computational resources. High-quality textures can significantly enhance the realism of a virtual environment, but they also require more memory and processing power.

One effective strategy for texture optimization is to combine MIP mapping with texture compression. MIP mapping precomputes a chain of progressively half-resolution copies of each texture. When an object is far from the viewer, the GPU samples a smaller copy. This reduces aliasing (shimmering) and improves texture-cache efficiency and rendering speed, at the cost of roughly one-third more memory. Texture compression reduces the size of textures in memory and on disk while keeping quality acceptable. GPU block-compression formats such as BC (DXT) on desktop and ASTC or ETC2 on mobile stay compressed in video memory and are decoded during sampling. Tools like Adobe's Substance 3D offer advanced options for creating and optimizing materials and textures for digital environments (source: Adobe).
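The memory side of these trade-offs is easy to estimate. The sketch below assumes a square 2048x2048 texture. It computes the size of a full mip chain and compares uncompressed RGBA8 (4 bytes per pixel) with BC1, a GPU block-compression format that stores each 4x4 pixel block in 8 bytes. The function names are illustrative. Real engines add per-platform alignment and padding that this ignores.

```python
def mip_chain(width: int, height: int) -> list[tuple[int, int]]:
    """Dimensions of every mip level: halve each side (minimum 1) until reaching 1x1."""
    levels = [(width, height)]
    while width > 1 or height > 1:
        width, height = max(1, width // 2), max(1, height // 2)
        levels.append((width, height))
    return levels

def texture_bytes(width: int, height: int, bytes_per_block: int, block: int = 1, mipmaps: bool = True) -> int:
    """Approximate size of a texture whose pixels are stored in block x block tiles."""
    total = 0
    for w, h in (mip_chain(width, height) if mipmaps else [(width, height)]):
        blocks_x = -(-w // block)   # ceiling division: a partial tile still takes a whole block
        blocks_y = -(-h // block)
        total += blocks_x * blocks_y * bytes_per_block
    return total

base = texture_bytes(2048, 2048, bytes_per_block=4, mipmaps=False)  # RGBA8, top level only
rgba8 = texture_bytes(2048, 2048, bytes_per_block=4)                # RGBA8 with full mip chain
bc1 = texture_bytes(2048, 2048, bytes_per_block=8, block=4)         # BC1: 8 bytes per 4x4 block

print(len(mip_chain(2048, 2048)))   # 12 levels, 2048 down to 1
print(round(rgba8 / base, 3))       # 1.333: the mip chain adds about a third
print(round(rgba8 / bc1, 2))        # 8.0: BC1 is about one eighth the size
```

BC1 supports only 1-bit alpha and trades away some quality. Formats such as BC7 (16 bytes per 4x4 block) are common when smooth alpha or higher fidelity is needed.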

Another aspect of material optimization is the use of physically based rendering (PBR) materials, which simulate the real-world properties of surfaces such as metals, glass, and plastics. PBR materials are essential for achieving photorealistic results in the Metaverse. They react to light in realistic ways, enhancing the immersive experience. However, they must be carefully managed to balance visual quality and performance, especially in a VR setting where frame rates are critical for user comfort.
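One concrete part of PBR's "react to light in realistic ways" behavior is Fresnel reflectance: surfaces reflect more light at grazing angles. Many real-time PBR shaders model this with Schlick's approximation, F(θ) = F0 + (1 - F0)(1 - cos θ)^5, where F0 is the reflectance when looking straight at the surface. The sketch below assumes F0 = 0.04, a value commonly used for non-metals such as plastic and glass. Metals use much higher, colored F0 values. `schlick_fresnel` is an illustrative helper.

```python
import math

def schlick_fresnel(cos_theta: float, f0: float) -> float:
    """Schlick's approximation of Fresnel reflectance for a view/normal angle with cosine cos_theta."""
    cos_theta = min(max(cos_theta, 0.0), 1.0)   # clamp to the valid range
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5

F0_DIELECTRIC = 0.04  # commonly assumed base reflectance for non-metals
for angle in (0, 45, 75, 89):
    f = schlick_fresnel(math.cos(math.radians(angle)), F0_DIELECTRIC)
    print(f"{angle:2d} degrees: {f:.3f}")
```

Reflectance stays near 4% when looking straight at the surface and climbs steeply toward 100% near grazing angles. The formula is cheap, which matters in VR, where every shader instruction counts against the frame budget.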

4. Types of 3D Models Used in the Metaverse

The Metaverse utilizes a variety of 3D models to create immersive and interactive virtual worlds. These models range from simple geometric shapes to complex interactive designs that mimic the real world or imagine fantastical scenarios. The choice of model type depends on the specific requirements of the Metaverse application, including the level of interactivity, realism, and computational constraints.

Static models are commonly used for background elements that do not interact with users, such as buildings, landscapes, and non-interactive furniture. These models are simpler in terms of design and do not contain moving parts or animations, which makes them less demanding on processing power. Static models are essential for creating the foundational visual elements of a virtual world and can be highly detailed since they do not require real-time calculations for movement.

Dynamic models, in contrast, include animations and can interact with users or change in response to user actions. These models are used for characters, vehicles, and other interactive elements. Dynamic models are more complex and require advanced programming and design to ensure they can move and respond realistically within the virtual environment. The creation and implementation of dynamic models are more resource-intensive but are crucial for creating engaging and interactive experiences in the Metaverse.

Understanding the differences and applications of static and dynamic models helps developers optimize their resources and create more engaging and efficient virtual environments. For more detailed insights into the types of 3D models, Autodesk offers a comprehensive guide that could be useful (source: Autodesk).

4.1 Static vs. Dynamic Models

In the realm of 3D modeling for the Metaverse, distinguishing between static and dynamic models is fundamental. Static models are typically used to represent objects that do not change or move, such as buildings, trees, and other scenery. These models are easier to create and render because they do not require animations or complex interactions. They play a crucial role in setting the scene and providing a realistic backdrop for more interactive elements.

Dynamic models, on the other hand, are characterized by their ability to move, interact, or perform actions within the virtual environment. This category includes avatars, animated creatures, and interactive gadgets. Dynamic models are essential for creating a lively and engaging Metaverse experience, as they can respond to user inputs and simulate real-world physics. The complexity of dynamic models means they are generally more resource-intensive than static models.

The choice between using static or dynamic models often depends on the specific needs of the Metaverse application and the balance between visual quality and performance. For developers and designers, understanding when to use each type of model can significantly impact the effectiveness and efficiency of a virtual environment.

4.2 Environment vs. Character Models

In 3D modeling, there are significant distinctions between environment models and character models. Each serves a unique purpose and requires different techniques and considerations. Environment models are the 3D representations of the settings and backgrounds in which characters interact. These range from interiors like rooms and buildings to expansive landscapes and cityscapes. The primary focus in environment modeling is on creating a convincing, immersive setting that supports the narrative and enhances the visual experience. Techniques such as photogrammetry are often used to create highly detailed and realistic environments. More about environment modeling can be found on Autodesk's AREA community site (https://area.autodesk.com/).

Character models, on the other hand, are the 3D representations of characters in video games, films, animations, and other media. These models are designed with a focus on articulation and movement, requiring a deep understanding of anatomy and motion to ensure they move believably. Character modeling often involves intricate detailing to capture facial expressions and body language that convey emotion and personality. Rigging, which is the process of creating the skeleton or structure that allows the character to move, is a critical step in character modeling. Tutorials and insights into character modeling can be found on platforms like CG Spectrum (https://www.cgspectrum.com/).
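Once a rig exists, a common way to deform the mesh with it is linear blend skinning. Each vertex is transformed by every bone that influences it, and the results are averaged using per-vertex weights that sum to 1. The sketch below uses a 2D arm with two bones for brevity. Real rigs use 3D 4x4 matrices and often cap influences at around four bones per vertex. `bone_rotation` and `skin_vertex` are illustrative names, not an engine API.

```python
import math
from typing import Callable

Vec2 = tuple[float, float]
Transform = Callable[[Vec2], Vec2]

def bone_rotation(angle_deg: float, pivot: Vec2) -> Transform:
    """Posed bone transform: rotate points about the bone's joint (pivot) by angle_deg."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    px, py = pivot

    def apply(p: Vec2) -> Vec2:
        x, y = p[0] - px, p[1] - py
        return (px + c * x - s * y, py + s * x + c * y)

    return apply

def skin_vertex(v: Vec2, influences: list[tuple[Transform, float]]) -> Vec2:
    """Linear blend skinning: weighted sum of the vertex as transformed by each bone."""
    moved = [(w, t(v)) for t, w in influences]
    return (sum(w * p[0] for w, p in moved), sum(w * p[1] for w, p in moved))

upper_arm = bone_rotation(0.0, pivot=(0.0, 0.0))    # shoulder stays put
forearm = bone_rotation(90.0, pivot=(1.0, 0.0))     # elbow bends 90 degrees

hand = skin_vertex((2.0, 0.0), [(forearm, 1.0)])                          # fully on the forearm
elbow_skin = skin_vertex((1.0, 0.1), [(upper_arm, 0.5), (forearm, 0.5)])  # shared between bones
print(hand, elbow_skin)
```

The elbow vertex lands halfway between its two candidate positions. That averaging also causes linear blend skinning's well-known "candy wrapper" volume loss at strongly twisted joints. Some tools offer alternatives, such as dual quaternion skinning, for that reason.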

Both types of models play crucial roles in digital media production, but the skills and tools required for each are quite distinct. While environment models provide the stage, character models are the actors, and both need to work harmoniously to create a compelling visual narrative.

4.3 Proprietary vs. Open-Source Models

In software development, and in 3D modeling in particular, the debate between proprietary and open-source software models (that is, ways of licensing and developing the tools themselves) is ongoing. Proprietary software consists of commercial products developed and sold by a company. Such tools often come with customer support, regular updates, and a polished user interface, which makes them a preferred choice for many professional studios and artists. Examples include Autodesk Maya and Adobe Photoshop. More details are available on each vendor's official website.

Open-source tools, such as Blender or GIMP, are developed in the open by a community of contributors and are free to use and modify. They offer flexibility at no licensing cost, which particularly appeals to students, freelancers, and small studios. Open-source software often fosters a community in which users contribute to the development, improvement, and troubleshooting of the software, which can lead to rapid innovation and customization. Insights into open-source software and its advantages can be found on Blender's official site (https://www.blender.org/).

Choosing between proprietary and open-source models often depends on specific project requirements, budget, and the scale of operations. While proprietary models offer stability and comprehensive support, open-source models provide flexibility and community support. Each has its strengths and can be the right choice under different circumstances.

5. Benefits of High-Performance 3D Models

High-performance 3D models are crucial in fields such as gaming, film production, virtual reality, and scientific visualization. They pair high visual detail with careful optimization, so they look realistic while running efficiently within software applications. One of their primary benefits is enhanced visual fidelity: high-resolution textures and complex geometry can bring digital scenes close to photorealism. This level of detail is particularly important in industries like film and video games, where visual realism can significantly impact user experience.

Another benefit is improved efficiency in workflow. High-performance models are optimized to work well within the limits of rendering software and hardware, helping to prevent bottlenecks in the production process. This optimization can lead to faster rendering times, which is crucial in industries where time is a critical factor. Additionally, these models are often designed to be scalable, meaning they can be adjusted for different levels of performance, which can be particularly useful for applications across various platforms.

Lastly, high-performance 3D models can lead to better interactivity in applications such as virtual reality (VR) and augmented reality (AR). These models are designed to react in real-time to user inputs, which can make VR and AR experiences more immersive and responsive. The benefits of using high-performance 3D models in VR can be further understood through resources available on sites like Road to VR (https://www.roadtovr.com/).

In conclusion, high-performance 3D models are invaluable in modern digital productions, offering enhanced realism, efficiency, and interactivity, which are essential for meeting the high expectations of today's media consumers.

5.1 Enhanced User Experience

The evolution of technology has significantly improved user experiences across various digital platforms. Enhanced user experience (UX) is pivotal in maintaining user engagement and satisfaction. This is particularly evident in the realms of web design, software applications, and interactive media. A well-designed user interface (UI) that is intuitive and responsive can drastically improve the overall UX, making applications easier to navigate and understand.

For instance, advancements in UX design have led to the development of more adaptive and user-friendly websites that are optimized for various devices, including mobile phones and tablets. This adaptability ensures that all users have a seamless experience, regardless of the device they are using. Websites like Smashing Magazine often discuss the importance of responsive design and its impact on user experience (https://www.smashingmagazine.com/).

Moreover, the integration of artificial intelligence and machine learning has allowed for more personalized experiences. Users can receive recommendations and content tailored specifically to their preferences and behaviors, enhancing their interaction with the platform. This level of personalization not only improves engagement but also increases the likelihood of user retention.

5.2 Improved Realism and Engagement

The integration of advanced graphics and computing power has dramatically enhanced the realism and engagement in video games and virtual reality (VR) environments. This improvement is crucial for creating immersive experiences that captivate users and maintain their interest over time. Enhanced graphics render more detailed and realistic environments, making the virtual world more believable and engaging.

For example, the gaming industry has seen significant advancements in realism with games like "The Last of Us Part II," which uses complex facial animations and environmental interactions to create a deeply engaging narrative and gameplay experience. Articles on platforms like IGN often explore how these technological advancements are transforming the gaming industry (https://www.ign.com/).

In VR, increased realism can lead to more effective training and educational applications, providing users with experiences that closely mimic real-world scenarios. This has been particularly beneficial in fields like medicine and aviation, where practicing procedures in a risk-free environment can be crucial. The potential of VR in professional training is discussed extensively on websites such as Virtual Reality Society (https://www.vrs.org.uk/).

5.3 Scalability in Complex Scenes

Scalability in complex scenes is a critical aspect of software and hardware performance, particularly in industries like animation and visual effects. As scenes become more detailed and populated with elements, the ability to scale processing power and manage large datasets efficiently becomes essential. This scalability ensures that the final output maintains high quality without compromising on speed or performance.

In computer graphics, NVIDIA’s RTX graphics cards, which include dedicated ray-tracing hardware, have made real-time ray tracing practical in complex scenes. This technology allows more realistic lighting, reflections, and shadows in real-time applications, which matters to game developers and filmmakers alike. More information on RTX technology can be found on NVIDIA’s official website (https://www.nvidia.com/).

Furthermore, cloud computing has also played a significant role in enhancing scalability. By leveraging cloud resources, companies can manage large datasets and computing tasks more effectively, allowing for smoother scalability in complex scenes. This is particularly useful in industries where rendering times and processing capabilities are critical, such as in animated feature films. Cloud technology and its impact on scalability are often featured in tech publications like TechCrunch (https://techcrunch.com/).

Each of these advancements plays a crucial role in pushing the boundaries of what is possible in digital environments, enhancing both the creator's and the end-user's experience.

6. Challenges in Creating 3D Models for the Metaverse

Creating 3D models for the Metaverse presents a unique set of challenges that developers and designers must navigate. As the Metaverse aims to be an immersive, interactive, and hyper-realistic environment, the creation of 3D models for this digital universe requires careful consideration of various technical and aesthetic factors.

6.1 Balancing Performance and Quality

One of the primary challenges in creating 3D models for the Metaverse is balancing performance and quality. High-quality 3D models are crucial for creating a realistic and engaging experience, but these models often require a significant amount of computational power and resources. This can lead to slower load times and reduced performance, particularly on lower-end devices.

To address this issue, developers must optimize their 3D models to ensure they are as lightweight as possible without compromising on visual quality. Techniques such as polygon reduction, level of detail (LOD) adjustments, and efficient texturing are commonly used to reduce the impact on performance. Additionally, developers must also consider the hardware capabilities of different devices to ensure that all users have a smooth and enjoyable experience in the Metaverse.
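Polygon reduction can be done in many ways. The sketch below shows one of the simplest, vertex clustering. Space is divided into a grid of cubic cells, all vertices in a cell are merged into their average, and triangles that collapse to a line or a point are discarded. It assumes an indexed triangle mesh and a hand-picked cell size. `cluster_decimate` is an illustrative name. Production decimation tools generally use error-driven methods, such as quadric error metrics, which preserve shape far better.

```python
import math

Vec3 = tuple[float, float, float]
Tri = tuple[int, int, int]

def cluster_decimate(vertices: list[Vec3], triangles: list[Tri], cell: float) -> tuple[list[Vec3], list[Tri]]:
    """Vertex-clustering decimation: merge all vertices that fall in the same grid cell."""
    cell_index: dict[tuple[int, int, int], int] = {}
    sums: list[list[float]] = []   # per cluster: [sum_x, sum_y, sum_z, count]
    remap: list[int] = []          # old vertex index -> cluster index
    for x, y, z in vertices:
        key = (math.floor(x / cell), math.floor(y / cell), math.floor(z / cell))
        if key not in cell_index:
            cell_index[key] = len(sums)
            sums.append([0.0, 0.0, 0.0, 0])
        acc = sums[cell_index[key]]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
        remap.append(cell_index[key])

    new_vertices = [(sx / n, sy / n, sz / n) for sx, sy, sz, n in sums]

    new_triangles: list[Tri] = []
    seen: set[frozenset[int]] = set()
    for a, b, c in triangles:
        tri = (remap[a], remap[b], remap[c])
        corners = frozenset(tri)
        if len(corners) < 3 or corners in seen:
            continue  # collapsed to a line/point, or duplicates a triangle already kept
        seen.add(corners)
        new_triangles.append(tri)
    return new_vertices, new_triangles

# A flat 4x4 grid of vertices (spacing 0.25) triangulated into 18 triangles.
grid = [(c * 0.25, r * 0.25, 0.0) for r in range(4) for c in range(4)]
tris: list[Tri] = []
for r in range(3):
    for c in range(3):
        v00, v01, v10, v11 = r * 4 + c, r * 4 + c + 1, (r + 1) * 4 + c, (r + 1) * 4 + c + 1
        tris += [(v00, v01, v11), (v00, v11, v10)]

verts, kept = cluster_decimate(grid, tris, cell=0.5)
print(len(grid), len(tris), "->", len(verts), len(kept))  # 16 18 -> 4 2
```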

For more insights on balancing performance and quality in 3D modeling, you can visit Autodesk's official blog.

6.2 Cross-Platform Compatibility

Another significant challenge in creating 3D models for the Metaverse is ensuring cross-platform compatibility. The Metaverse is accessed through various devices, including VR headsets, smartphones, tablets, and PCs, each with different operating systems and hardware specifications. This diversity requires models to be adaptable and functional across all platforms to provide a seamless user experience.

Developers should export to widely supported interchange formats, such as glTF 2.0, FBX, or USD, and consider the specific requirements and limitations of each platform when designing 3D models. Game engines like Unity or Unreal Engine help here: an asset can be imported once and then built for several target platforms, with per-platform settings such as texture size and compression format. Testing on multiple devices is also crucial to identify and fix compatibility issues before the models are deployed in the Metaverse.
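glTF 2.0 is the Khronos Group's runtime format for 3D assets. As an example of a widely supported interchange format, the sketch below writes a glTF 2.0 document containing a single triangle, with the binary geometry embedded as a base64 data URI. It follows the specification's layered structure: buffers, bufferViews, accessors, meshes, nodes, and scenes. The helper name is illustrative. A real exporter would also write normals, UVs, and materials, usually through a modeling tool's exporter rather than by hand.

```python
import base64
import json
import struct

def triangle_gltf() -> str:
    """Return a glTF 2.0 JSON document with one triangle, geometry embedded as base64."""
    indices = struct.pack("<3H", 0, 1, 2)                 # 3 x uint16 = 6 bytes
    padding = b"\x00\x00"                                 # pad so float data starts at byte 8
    positions = struct.pack("<9f", 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0)  # 3 x vec3 float32
    blob = indices + padding + positions                  # 44 bytes, little-endian as glTF requires
    doc = {
        "asset": {"version": "2.0"},
        "scene": 0,
        "scenes": [{"nodes": [0]}],
        "nodes": [{"mesh": 0}],
        "meshes": [{"primitives": [{"attributes": {"POSITION": 1}, "indices": 0}]}],
        "buffers": [{
            "uri": "data:application/octet-stream;base64," + base64.b64encode(blob).decode("ascii"),
            "byteLength": len(blob),
        }],
        "bufferViews": [
            {"buffer": 0, "byteOffset": 0, "byteLength": 6, "target": 34963},   # ELEMENT_ARRAY_BUFFER
            {"buffer": 0, "byteOffset": 8, "byteLength": 36, "target": 34962},  # ARRAY_BUFFER
        ],
        "accessors": [
            # 5123 = UNSIGNED_SHORT, 5126 = FLOAT; POSITION accessors must declare min and max.
            {"bufferView": 0, "componentType": 5123, "count": 3, "type": "SCALAR", "max": [2], "min": [0]},
            {"bufferView": 1, "componentType": 5126, "count": 3, "type": "VEC3",
             "max": [1.0, 1.0, 0.0], "min": [0.0, 0.0, 0.0]},
        ],
    }
    return json.dumps(doc, indent=2)

gltf_text = triangle_gltf()
print(json.loads(gltf_text)["asset"])  # {'version': '2.0'}
```

Saving the returned string as `triangle.gltf` should produce a file that standard glTF viewers can open.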

By overcoming these challenges, developers can create 3D models that not only enhance the visual appeal of the Metaverse but also ensure a consistent and accessible experience for all users.

6.3 Intellectual Property Issues

Intellectual property (IP) issues are a significant concern in the realm of 3D modeling, especially as these creations become integral to digital environments like the Metaverse. As creators develop unique digital assets, the need to protect these assets from unauthorized use or reproduction becomes crucial. The challenge lies in the digital nature of these assets, which can be easily copied and distributed without significant degradation in quality.

One of the primary concerns is the establishment of ownership. Unlike physical creations, digital models are easy to replicate and share, making it difficult to trace the original source. This has led to numerous legal battles over the ownership of digital content. For instance, in the gaming industry, developers often face issues when their designs are used without permission in other games or media. The World Intellectual Property Organization (WIPO) provides guidelines and resources for protecting digital creations that are valuable for creators in the Metaverse (source: WIPO).

Moreover, blockchain technology is seen as a potential solution to these IP issues. By maintaining a decentralized ledger of ownership, a blockchain can provide a transparent, tamper-evident record of when an asset was created and how it changed hands. This can help establish and prove ownership and support the management of IP rights in a way that suits the digital nature of the Metaverse, although a token recording who holds an asset does not by itself grant or enforce legal rights. For more detailed insights, articles on platforms like Forbes often discuss the intersection of blockchain and IP rights.
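The property that makes such a ledger useful for provenance is tamper evidence: each entry stores the hash of the previous one, so altering any past record breaks the chain. The following is a deliberately simplified, single-machine sketch of that idea; `OwnershipLedger` and the asset IDs are hypothetical, and a real blockchain adds digital signatures, replication across many nodes, and a consensus protocol, none of which appear here.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def record_hash(record):
    # Hash a canonical JSON encoding so key order cannot change the digest.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

class OwnershipLedger:
    """Append-only, hash-chained log of asset transfers (no consensus, one process)."""

    def __init__(self):
        self.entries = []

    def append(self, asset_id, owner):
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"asset_id": asset_id, "owner": owner, "prev": prev}
        self.entries.append({**record, "hash": record_hash(record)})

    def owner_of(self, asset_id):
        # The most recent entry for an asset names its current holder.
        for entry in reversed(self.entries):
            if entry["asset_id"] == asset_id:
                return entry["owner"]
        return None

    def verify(self):
        # Recompute every hash and check each link to the previous entry.
        prev = GENESIS
        for entry in self.entries:
            record = {k: entry[k] for k in ("asset_id", "owner", "prev")}
            if entry["prev"] != prev or entry["hash"] != record_hash(record):
                return False
            prev = entry["hash"]
        return True

ledger = OwnershipLedger()
ledger.append("statue-001", "alice")    # hypothetical asset and creator
ledger.append("statue-001", "bob")      # transfer to a new owner
print(ledger.owner_of("statue-001"), ledger.verify())
ledger.entries[0]["owner"] = "mallory"  # rewrite history...
print(ledger.verify())                  # ...and verification fails
```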

7. Future Trends in 3D Modeling for the Metaverse

The future of 3D modeling in the Metaverse is poised for transformative growth, driven by technological advancements and increasing integration of virtual spaces in everyday activities. As the Metaverse evolves, the demand for more sophisticated and realistic 3D models is expected to skyrocket, necessitating innovations in the tools and technologies used for 3D modeling.

One of the key trends is the move towards more immersive and interactive models. This involves not just visual fidelity but also models that can interact in real time with users and with other models in a virtual environment. Motion capture and sensor input allow avatars to reproduce human gestures, while physics simulation lets objects respond to forces the way real-world objects do, enhancing the user experience in the Metaverse. For a deeper understanding of how these technologies are evolving, Engadget often covers the latest advancements in tech that could impact 3D modeling (source: Engadget).

Additionally, the rise of cloud computing is enabling more complex and resource-intensive modeling processes. This technology allows for the handling of large datasets and complex rendering tasks that were previously not possible on individual workstations. As cloud technology continues to advance, it will likely become a fundamental part of 3D modeling workflows, providing the necessary power and scalability required for creating detailed and complex models for the Metaverse.

7.1 Advances in AI-Driven Modeling Tools

Artificial Intelligence (AI) is set to revolutionize the field of 3D modeling, particularly with the development of AI-driven tools that can automate and enhance various aspects of the modeling process. These tools leverage machine learning algorithms to understand and replicate complex patterns and structures, which can significantly reduce the time and effort required to create detailed 3D models.

One of the most exciting advancements is in generative design, where AI algorithms propose optimizations and variations of a model based on specific parameters set by the creator. This not only speeds up the design process but also enables the exploration of new and innovative design possibilities that may not have been considered before. Autodesk, a leader in 3D design software, is at the forefront of integrating AI into its tools, providing a glimpse into the future of automated and intelligent modeling (source: Autodesk).
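Generative design tools search a space of parameters for variants that satisfy the designer's constraints. The toy sketch below shows only that propose-filter-rank loop, using plain random search rather than machine learning, for a hypothetical rectangular beam whose stiffness requirement is expressed as a minimum second moment of area (b·h³/12). The parameter ranges and the `min_inertia` threshold are illustrative assumptions, not values from any Autodesk product.

```python
import random

def generate_variants(n_samples, min_inertia=2.0e6, b_range=(10.0, 100.0),
                      h_range=(10.0, 200.0), seed=0):
    """Propose random beam sections, keep those stiff enough, rank by material.

    b and h are width and height in mm; inertia = b * h**3 / 12 in mm^4.
    """
    rng = random.Random(seed)  # seeded so the same inputs give the same variants
    feasible = []
    for _ in range(n_samples):
        b = rng.uniform(*b_range)
        h = rng.uniform(*h_range)
        inertia = b * h ** 3 / 12
        if inertia >= min_inertia:  # constraint set by the designer
            feasible.append({"b": b, "h": h, "area": b * h, "inertia": inertia})
    # Objective: least cross-sectional area (i.e. least material) first.
    feasible.sort(key=lambda v: v["area"])
    return feasible

for v in generate_variants(1000)[:3]:
    print(f"b={v['b']:.1f} mm  h={v['h']:.1f} mm  area={v['area']:.0f} mm^2")
```

Tall, narrow sections tend to rise to the top of the ranking, because height contributes cubically to stiffness but only linearly to material use: the kind of non-obvious trade-off such tools surface automatically.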

Furthermore, AI is also improving the realism of 3D models through better texturing and lighting techniques. AI-driven tools can analyze real-world images and videos to create highly realistic textures and lighting environments, making the models look more lifelike than ever. This enhancement is crucial for creating engaging and believable environments in the Metaverse, where user immersion is key.

Overall, the integration of AI into 3D modeling tools is not just about automation but also about enhancing creativity and efficiency, paving the way for more dynamic and complex creations in the Metaverse.

7.2 Integration of Real-World Data through Photogrammetry

Photogrammetry is a technique that has revolutionized the way real-world data is integrated into various fields, particularly video games and virtual reality. The process involves capturing many overlapping photographs of a real-world object or scene from different angles and using software to reconstruct its geometry and surface textures as an accurate 3D model. These models can then be used in digital environments, providing a level of realism and detail that was previously difficult to achieve. For instance, game developers use photogrammetry to create detailed environments that make the gaming experience more immersive and realistic.
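At the heart of photogrammetry is triangulation: once the same feature has been matched in two photos taken from known camera positions, its 3D location lies where the two viewing rays meet. Because measured rays rarely intersect exactly, a simple approach is to take the midpoint of the shortest segment between them. The sketch below assumes the camera centers and ray directions have already been recovered (in practice by calibration and structure-from-motion software); it illustrates the midpoint method only, not a full reconstruction pipeline.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def triangulate_midpoint(c1, d1, c2, d2, eps=1e-12):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2.

    c1, c2: camera centers; d1, d2: direction of each camera's ray toward the
    matched feature. eps is an absolute threshold, so it depends on scale.
    """
    w0 = tuple(p - q for p, q in zip(c1, c2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        raise ValueError("rays are (nearly) parallel; no reliable intersection")
    # Closed-form minimizers of |(c1 + s*d1) - (c2 + t*d2)|^2.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return tuple((x + y) / 2 for x, y in zip(p1, p2))

# Two cameras 5 units apart both observe the same (hypothetical) feature.
print(triangulate_midpoint((0, 0, 0), (1, 2, 10), (5, 0, 0), (-4, 2, 10)))
```

Repeating this for thousands of matched features yields the point cloud from which photogrammetry software then builds a mesh.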

One of the key benefits of photogrammetry is its ability to produce highly detailed and accurate representations of physical objects and landscapes. This is particularly useful in industries like archaeology and architecture, where precise, detailed representations are crucial. The technology is not limited to large-scale projects; it is also accessible to smaller developers thanks to advancements in software and camera technology. Websites like Sketchfab offer numerous examples of photogrammetry in action, showcasing how this technology is applied across different sectors (source: Sketchfab).

Moreover, the integration of photogrammetry in projects can significantly reduce the time and cost associated with creating 3D models from scratch. It allows for the rapid prototyping and testing of environments, which is a boon for project timelines and budgets. The technology's growing accessibility and ease of use continue to drive its popularity across various industries, making it a staple in the toolkit of many professionals today.

7.3 Increasing Use of Procedural Generation Techniques

Procedural generation refers to the method of creating data algorithmically as opposed to manually. In the realm of video games and simulations, this technique is used to generate vast amounts of content automatically, which can lead to more varied and dynamic environments. This approach not only saves significant amounts of development time but also allows for the creation of large, expansive worlds that would be impractical to craft by hand.
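A common building block for procedural terrain is a noise function: a seeded, smoothly varying random field sampled at several frequencies. The sketch below generates a small heightmap from value noise, a simpler relative of Perlin noise. It illustrates the general technique, not the algorithm used by any particular game, and the `scale` and `octaves` defaults are arbitrary choices.

```python
import math
import random

def lattice(seed, x, y):
    """Deterministic pseudo-random value in [0, 1) for integer grid point (x, y)."""
    # A string seed is converted deterministically (not via hash()), so the
    # same seed reproduces the same terrain on every run.
    return random.Random(f"{seed}:{x}:{y}").random()

def smoothstep(t):
    # Eases the interpolation so the field shows no visible grid creases.
    return t * t * (3.0 - 2.0 * t)

def value_noise(seed, x, y):
    """Interpolate the four lattice values surrounding (x, y)."""
    x0, y0 = math.floor(x), math.floor(y)
    tx, ty = smoothstep(x - x0), smoothstep(y - y0)
    v00 = lattice(seed, x0, y0)
    v10 = lattice(seed, x0 + 1, y0)
    v01 = lattice(seed, x0, y0 + 1)
    v11 = lattice(seed, x0 + 1, y0 + 1)
    top = v00 + (v10 - v00) * tx
    bottom = v01 + (v11 - v01) * tx
    return top + (bottom - top) * ty

def heightmap(seed, width, height, scale=8.0, octaves=4):
    """Fractal value noise: sum octaves at doubling frequency, halving amplitude."""
    rows = []
    for j in range(height):
        row = []
        for i in range(width):
            amp, freq, total, norm = 1.0, 1.0, 0.0, 0.0
            for _ in range(octaves):
                total += amp * value_noise(seed, i * freq / scale, j * freq / scale)
                norm += amp
                amp *= 0.5
                freq *= 2.0
            row.append(total / norm)  # weighted average stays in [0, 1)
        rows.append(row)
    return rows

# Same seed, same world: print a coarse ASCII preview of the terrain.
for row in heightmap(seed=42, width=32, height=8):
    print("".join(" .:-=+*#"[int(v * 8)] for v in row))
```

Because only the seed needs to be stored, a world of any size can be regenerated on demand instead of being saved to disk.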

The use of procedural generation can be seen in games like "Minecraft" and "No Man's Sky," where each player's experience is unique thanks to the algorithmically generated environments and ecosystems. These games demonstrate the potential of procedural generation to create endless possibilities for exploration and interaction, which keeps the gameplay fresh and engaging for users. More information on how these games utilize procedural generation can be found on their official websites (source: Minecraft, No Man's Sky).

Furthermore, procedural generation is not limited to landscapes and environments. It is also used for generating textures, weather patterns, and even story elements in games. This versatility makes it an invaluable tool in the development of interactive media, where variability can greatly enhance the user's experience. As technology advances, the algorithms behind procedural generation continue to improve, leading to even more sophisticated and realistic outputs.

8. Real-World Examples

Real-world examples of the integration of advanced technologies in everyday applications are numerous and demonstrate the profound impact of these innovations. For instance, Google Earth uses satellite imagery and photogrammetry to provide detailed and accurate representations of the Earth’s surface. This tool has become indispensable for professionals in fields such as geography, urban planning, and education, offering an easy-to-use platform for exploring and analyzing our planet (source: Google Earth).

Another example is the use of AI in healthcare, where machine learning algorithms are used to predict patient outcomes, personalize treatments, and streamline operations. Companies like IBM and Google are at the forefront of integrating AI into healthcare, with systems that can analyze complex medical data and assist in diagnostic processes (source: IBM Watson Health, Google Health).

These examples highlight the versatility and transformative potential of modern technologies. Whether improving the accuracy of world maps or enhancing the efficiency of healthcare systems, these technologies are making significant contributions across various sectors, reshaping how we interact with the world and each other.

8.1 Case Studies of Successful 3D Models in Popular Metaverses

The integration of 3D models in metaverses has revolutionized virtual environments, offering immersive experiences that mimic real-world interactions. One notable example is the virtual world Second Life, where users interact through avatars in a fully user-generated world. These avatars and the environment are sophisticated 3D models that have been refined over the years to provide realistic interactions and aesthetics. More on Second Life’s approach to 3D modeling can be found on its official website.

Another significant case study is The Sandbox, a blockchain-based metaverse that leverages user-generated content to create a vast, interactive world. Here, 3D models are not just visual elements but also integral to gameplay and economics, as users can create, own, and monetize their creations. The Sandbox’s approach to 3D modeling emphasizes user empowerment and economic opportunity, making it a pioneering model in the metaverse space. For more details, visit The Sandbox website.

Lastly, Fortnite has also made remarkable strides with its Party Royale mode, where the game shifts focus from battle royale to a social hub. This mode features concerts, social gatherings, and more, all within intricately designed 3D spaces that enhance user engagement and interaction. Fortnite’s use of 3D models in Party Royale demonstrates how dynamic and versatile these models can be in creating engaging user experiences. Explore more about Fortnite’s 3D modeling on their community page.

8.2 Comparative Analysis of Different Modeling Approaches

3D modeling techniques vary widely across different platforms and purposes, each with its own set of tools, workflows, and outcomes. Polygonal modeling, for instance, is widely used for creating video game assets because it gives precise control over surfaces and vertices. Polygon meshes are the native asset format of real-time engines like Unity and Unreal Engine, which are standard in the gaming industry for their robust, high-fidelity graphics capabilities. More insights into polygonal modeling can be found through various online tutorials and platform documentation.

In contrast, NURBS (Non-Uniform Rational B-Splines) modeling is favored in industries where precision is crucial, such as automotive and aerospace engineering. NURBS provides high levels of accuracy in creating complex, smoothly curved surfaces. This approach is less common in the gaming industry but is integral to CAD software used in engineering and product design. For a deeper understanding of NURBS, engineering-focused resources and software documentation can provide comprehensive guides.
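The precision advantage of NURBS is easiest to see with a circle, which polygons can only approximate but a rational curve represents exactly. The sketch below evaluates a NURBS curve with the Cox-de Boor recursion and uses the standard quadratic quarter-circle construction (control points (1, 0), (1, 1), (0, 1) with weights 1, √2/2, 1). Function names are illustrative, and production CAD kernels use faster, non-recursive evaluation.

```python
import math

def basis(i, p, u, knots):
    """Cox-de Boor recursion for the i-th B-spline basis function of degree p."""
    if p == 0:
        if knots[i] <= u < knots[i + 1]:
            return 1.0
        # Close the last non-empty span so u == knots[-1] gives the end point.
        if u == knots[-1] and knots[i] < knots[i + 1] == knots[-1]:
            return 1.0
        return 0.0
    result = 0.0
    left_den = knots[i + p] - knots[i]
    if left_den > 0:  # terms with zero-length spans are defined as 0
        result += (u - knots[i]) / left_den * basis(i, p - 1, u, knots)
    right_den = knots[i + p + 1] - knots[i + 1]
    if right_den > 0:
        result += (knots[i + p + 1] - u) / right_den * basis(i + 1, p - 1, u, knots)
    return result

def nurbs_point(u, control_points, weights, knots, degree):
    """Rational combination: sum(N_i * w_i * P_i) / sum(N_i * w_i)."""
    num_x = num_y = den = 0.0
    for i, ((x, y), w) in enumerate(zip(control_points, weights)):
        nw = basis(i, degree, u, knots) * w
        num_x += nw * x
        num_y += nw * y
        den += nw
    return (num_x / den, num_y / den)

# Quadratic NURBS quarter circle of radius 1.
CTRL = [(1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
WEIGHTS = [1.0, math.sqrt(2) / 2, 1.0]
KNOTS = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]

for u in (0.0, 0.25, 0.5, 0.75, 1.0):
    x, y = nurbs_point(u, CTRL, WEIGHTS, KNOTS, degree=2)
    print(f"u={u:.2f}  point=({x:.4f}, {y:.4f})  radius={math.hypot(x, y):.6f}")
```

Every sample lands at radius 1 up to floating-point rounding, whereas a polygonal circle only approaches that as more segments are added.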

Lastly, digital sculpting has become a game-changer in the film and animation industry, allowing for intuitive, artistic control over 3D models. Tools like ZBrush or Mudbox enable artists to sculpt detailed characters and creatures as if they were working with real clay. This method is particularly useful for creating highly detailed and expressive models that can be used in films, animations, and high-end video games. To compare these tools and their applications, visiting forums and community discussions can offer real-world insights and comparisons.

9. In-depth Explanations

In-depth explanations of complex subjects require a structured approach to ensure clarity and comprehensibility. For instance, when explaining advanced scientific concepts, breaking down the information into manageable parts and using analogies can greatly aid in understanding. Websites like Khan Academy or educational YouTube channels frequently use this method to teach a wide range of topics effectively.

In the context of technology, explaining software development processes or the architecture of a system often involves diagrams and step-by-step breakdowns. Sites like Medium or various tech blogs provide extensive articles that delve into these topics, offering both high-level overviews and detailed walkthroughs.

Furthermore, in the field of economics, concepts such as market dynamics or fiscal policies can be intricate. Economists and educators might use case studies or historical data to illustrate these concepts, making them more relatable and easier to understand. Financial education platforms like Investopedia are excellent resources for finding in-depth explanations that are both accurate and accessible to a general audience.

9.1 Technical Breakdown of 3D Modeling Software

3D modeling software is a critical tool in the fields of animation, engineering, and architecture, enabling the creation of detailed three-dimensional objects and environments. These programs vary widely in their capabilities, interfaces, and intended uses, but they share a few core functionalities. Full-featured packages such as Autodesk Maya and Blender provide tools for modeling, texturing, rigging, and rendering, while more specialized tools such as SketchUp concentrate on modeling.

Modeling tools are perhaps the most fundamental aspect of these programs, allowing users to create complex geometrical shapes. These tools can include features for sculpting, box modeling, and parametric modeling, each suited to different types of projects and skill levels. Texturing brings these models to life, adding surface details that enhance realism or artistic effect. Rigging tools are used in character animation to create a skeleton-like structure that animates the model. Lastly, rendering is the process by which the software generates the final image or animation from the completed 3D model, incorporating light, shadow, and optical effects to achieve a high level of realism or the desired artistic style.
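Rigging is easier to picture through the deformation step that follows it. In linear blend skinning, a widely used skinning method, each vertex is moved by a weighted average of its bones' transforms. The 2D sketch below assumes each bone transform already maps the bind pose to the current pose; the helper names and the two-bone elbow setup are hypothetical.

```python
import math

def bone_transform(angle, tx=0.0, ty=0.0):
    """Return a 2D rigid transform: rotate by `angle` radians about the origin
    (here, the joint), then translate by (tx, ty)."""
    c, s = math.cos(angle), math.sin(angle)
    return lambda p: (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def skin_vertex(rest_pos, influences):
    """Linear blend skinning.

    influences: list of (transform, weight) pairs; each transform is assumed to
    map the bind pose to the current pose, and the weights should sum to 1.
    """
    x = y = 0.0
    for transform, weight in influences:
        px, py = transform(rest_pos)
        x += weight * px
        y += weight * py
    return (x, y)

upper_arm = bone_transform(0.0)          # hypothetical bone, not rotated
forearm = bone_transform(math.pi / 2)    # hypothetical bone, bent 90 degrees
elbow_vertex = (1.0, 0.0)
print(skin_vertex(elbow_vertex, [(upper_arm, 0.5), (forearm, 0.5)]))
```

A vertex weighted half-and-half between the two bones lands at roughly (0.5, 0.5), between the two transformed positions. This averaging is what makes joints bend smoothly, and also why extreme twists can visibly lose volume with this method.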

For a deeper dive into the technical aspects of 3D modeling software, you can visit Autodesk’s official page.

9.2 Step-by-Step Guide to Model Optimization

Model optimization in 3D modeling is crucial for maintaining efficiency and performance, especially in video game design and real-time applications. The process involves reducing the polygon count of a model while preserving as much of the original detail and visual fidelity as possible. This can be achieved through various techniques such as mesh simplification, retopology, and the use of normal maps to simulate high-resolution details on lower-polygon surfaces.

The first step in model optimization is evaluating the model’s complexity and identifying areas where reductions can be made without significantly impacting the visual quality. Tools like MeshLab or Blender offer functionalities to analyze and reduce polygon counts effectively. The next step involves retopology, which is the process of re-creating the model’s mesh with a focus on maintaining a clean topology with fewer polygons. This step is crucial for animation and game assets, as it ensures that the mesh deforms properly.
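As a concrete example of polygon reduction, the sketch below implements vertex clustering, one of the simplest decimation methods: vertices are snapped to a uniform grid, each occupied cell is merged into one averaged vertex, and triangles that collapse are dropped. It is fast but crude; the decimation tools in MeshLab and Blender generally rely on more careful edge-collapse approaches that preserve shape better. The `cell_size` parameter is the assumption that controls how aggressive the reduction is.

```python
import math

def cluster_decimate(vertices, triangles, cell_size):
    """Simplify a triangle mesh by vertex clustering on a uniform grid.

    vertices: list of (x, y, z); triangles: list of (i, j, k) vertex indices.
    Returns (new_vertices, new_triangles).
    """
    cell_of = []  # grid cell of each original vertex
    sums = {}     # cell -> [sum_x, sum_y, sum_z, count]
    for x, y, z in vertices:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size),
                math.floor(z / cell_size))
        cell_of.append(cell)
        acc = sums.setdefault(cell, [0.0, 0.0, 0.0, 0])
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1

    # One representative vertex per occupied cell: the average of its members.
    new_index, new_vertices = {}, []
    for cell, (sx, sy, sz, n) in sums.items():
        new_index[cell] = len(new_vertices)
        new_vertices.append((sx / n, sy / n, sz / n))

    new_triangles, seen = [], set()
    for a, b, c in triangles:
        ia, ib, ic = (new_index[cell_of[v]] for v in (a, b, c))
        if ia == ib or ib == ic or ia == ic:
            continue  # triangle collapsed to a line or a point
        key = frozenset((ia, ib, ic))
        if key not in seen:  # merging can create duplicate triangles
            seen.add(key)
            new_triangles.append((ia, ib, ic))
    return new_vertices, new_triangles

# A flat 5x5 vertex grid (32 triangles) as a stand-in for a dense mesh.
n = 5
grid_vertices = [(i / 4, j / 4, 0.0) for j in range(n) for i in range(n)]
grid_triangles = []
for j in range(n - 1):
    for i in range(n - 1):
        v = j * n + i
        grid_triangles += [(v, v + 1, v + n + 1), (v, v + n + 1, v + n)]
verts, tris = cluster_decimate(grid_vertices, grid_triangles, cell_size=0.5)
print(len(grid_vertices), len(grid_triangles), "->", len(verts), len(tris))
```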

Finally, the use of texturing techniques like baking high-resolution details into normal maps allows the model to maintain the illusion of complexity without the computational overhead. This is particularly useful in game development, where performance is paramount. For a comprehensive guide on model optimization, CG Cookie provides extensive tutorials and resources on its website.
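Baking ultimately stores each high-resolution surface normal as a color in a texture. The sketch below shows only that encoding step for an 8-bit tangent-space normal map, mapping each component from [-1, 1] to [0, 255]. It assumes the OpenGL-style convention (some engines expect the green channel flipped, DirectX-style), and the ray casting that finds the high-resolution normal in the first place is left out.

```python
import math

def encode_normal(n):
    """Map a unit tangent-space normal from [-1, 1] per axis to 8-bit RGB."""
    return tuple(round((c + 1.0) * 0.5 * 255) for c in n)

def decode_normal(rgb):
    """Inverse mapping, renormalized because 8-bit quantization alters the length."""
    v = [c / 255 * 2.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

flat = (0.0, 0.0, 1.0)     # surface facing straight out of the low-poly face
tilted = (0.6, 0.0, 0.8)   # unit normal leaning along the tangent (+X)
print(encode_normal(flat), encode_normal(tilted))
print(decode_normal(encode_normal(tilted)))
```

The flat normal encodes to (128, 128, 255), the light blue that dominates typical normal maps; only pixels where the baked detail bends the surface drift away from that color.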

10. Comparisons & Contrasts

When comparing 3D modeling software, it's important to consider several factors such as cost, usability, feature set, and the specific needs of the project or industry. For instance, Autodesk Maya is widely used in the film and video game industries for its robust set of features that cater to both modeling and animation. It is, however, quite expensive, making it less accessible for hobbyists or small studios. On the other hand, Blender is a powerful open-source alternative that offers a comprehensive suite of tools for modeling, rendering, and animation without the cost barrier.

In terms of usability, software like SketchUp is favored in architectural modeling for its intuitive design and ease of use, making it ideal for beginners and professionals who need to quickly draft designs. Conversely, software like ZBrush excels in sculptural modeling and digital art, providing advanced tools tailored for detailed and complex organic shapes, which might be overkill for someone needing to model simple, hard-surface objects.

Each software also has its own learning curve, community support, and compatibility with other tools, which are crucial factors to consider. For a detailed comparison of popular 3D modeling software, Digital Arts Online offers insights and reviews that can help in making an informed decision.

10.1 Comparing Various 3D Modeling Tools and Their Suitability for Metaverse Development

When it comes to developing for the Metaverse, the choice of 3D modeling tools is crucial as it impacts not only the visual quality but also the functionality and user interaction within the virtual environment. Among the plethora of available tools, three stand out for their specific features and compatibility with Metaverse development: Blender, Autodesk Maya, and Unity.

Blender is a powerful, open-source program offering a comprehensive suite of tools for modeling, animation, and rendering. It is particularly favored for its free access and strong community of users. Blender adapts well to Metaverse projects thanks to its built-in export to real-time-friendly formats such as glTF and its real-time Eevee render engine. More about Blender can be found on its official site.

Autodesk Maya is another heavyweight in the realm of 3D modeling, known for its highly sophisticated modeling tools and fine control over animations. It is ideal for developers looking to create intricate and detailed environments or characters. Maya integrates well with Unity, another popular engine for Metaverse development, allowing for seamless asset transfer and management.

Unity, while primarily a game engine, offers in-editor modeling through its ProBuilder package, which is useful for blocking out levels and simple geometry, and is particularly noted for its ease of use in creating interactive experiences. It supports a wide range of VR and AR development requirements, making it a versatile tool for Metaverse applications. Unity’s Asset Store also provides numerous ready-made models and environments that can be customized for rapid development.

Each tool has its strengths and is suitable for different aspects of Metaverse development, depending on the project's specific needs and the developer's expertise.

10.2 Contrasting Traditional 3D Modeling with Metaverse-Specific Challenges

Traditional 3D modeling and Metaverse development share foundational techniques and tools, but the latter introduces unique challenges that require specialized solutions. Traditional 3D modeling typically targets media such as films, visualizations, and conventional games, whose content is either pre-rendered or built for a known platform and, in the case of film and visualization, consumed as a non-interactive, linear experience. In contrast, Metaverse development demands not only visual realism but also optimization for real-time interaction and scalability to support potentially thousands of simultaneous users.

One of the primary challenges in Metaverse-specific modeling is creating environments that are not only visually appealing but also highly optimized for performance. This involves using techniques like LOD (Level of Detail) management, which ensures that the graphical complexity of objects is adjusted based on the viewer's distance to maintain performance without sacrificing visual quality. More insights into LOD can be found in various development forums and documentation resources online.
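A distance-based LOD switch can be sketched in a few lines. The switch distances below are hypothetical, and many engines (Unity's LOD Group, for instance) switch on an object's projected screen size rather than raw distance. The hysteresis margin is one common way to keep a model from flickering between levels when the viewer hovers near a boundary.

```python
def select_lod(distance, thresholds, current=None, hysteresis=0.1):
    """Return an LOD index (0 = most detailed) for a viewer at `distance`.

    thresholds: ascending switch distances; beyond thresholds[i] the model
    uses LOD i + 1. current: the LOD used last frame, if any. When moving back
    toward a finer LOD, the switch happens only once the viewer is a fraction
    `hysteresis` inside the boundary, preventing rapid flicker near it.
    """
    lod = sum(1 for t in thresholds if distance > t)
    if current is not None and lod < current:
        boundary = thresholds[current - 1] * (1.0 - hysteresis)
        if distance > boundary:
            return current  # still inside the hysteresis band: keep coarser LOD
    return lod

LOD_DISTANCES = [10.0, 25.0, 50.0]  # hypothetical switch distances in meters

for d in (5.0, 30.0, 60.0):
    print(d, select_lod(d, LOD_DISTANCES))
# Walking back from LOD 2: at 24 m the coarser mesh is kept, at 22 m it switches.
print(select_lod(24.0, LOD_DISTANCES, current=2), select_lod(22.0, LOD_DISTANCES, current=2))
```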

Another challenge is the integration of interactive elements, which requires both programming skills and an understanding of user experience design. Unlike traditional models, Metaverse assets often need to include interactive components, such as doors that open or items that can be picked up by users. This interactivity introduces a layer of complexity in both the design and testing phases.
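Interactivity of this kind is usually modeled as a small state machine attached to the asset. The sketch below is a hypothetical door: a user within reach can toggle it, and `update` advances a fixed-length open or close animation each frame. Names, the reach distance, and the timing are assumptions, and in a shared Metaverse space the state would also need to be synchronized across users, which this single-process example does not attempt.

```python
import math
from enum import Enum

class DoorState(Enum):
    CLOSED = "closed"
    OPENING = "opening"
    OPEN = "open"
    CLOSING = "closing"

class Door:
    """Hypothetical interactive door with a timed open/close animation."""

    def __init__(self, position, reach=2.0, duration=1.0):
        self.position = position
        self.reach = reach          # max distance (meters) a user can interact from
        self.duration = duration    # seconds for a full open or close
        self.state = DoorState.CLOSED
        self.progress = 0.0         # 0 = fully closed, 1 = fully open

    def interact(self, user_position):
        """Toggle the door if the user is close enough; return whether it worked."""
        if math.dist(self.position, user_position) > self.reach:
            return False
        if self.state in (DoorState.CLOSED, DoorState.CLOSING):
            self.state = DoorState.OPENING
        else:
            self.state = DoorState.CLOSING
        return True

    def update(self, dt):
        """Advance the animation by dt seconds (called once per frame)."""
        step = dt / self.duration
        if self.state is DoorState.OPENING:
            self.progress = min(1.0, self.progress + step)
            if self.progress == 1.0:
                self.state = DoorState.OPEN
        elif self.state is DoorState.CLOSING:
            self.progress = max(0.0, self.progress - step)
            if self.progress == 0.0:
                self.state = DoorState.CLOSED

door = Door(position=(0.0, 0.0, 0.0))
print(door.interact((5.0, 0.0, 0.0)), door.state)  # too far away
print(door.interact((1.0, 0.0, 0.0)), door.state)  # starts opening
door.update(0.5)
door.update(0.6)
print(door.state, door.progress)
```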

Finally, ensuring that 3D models are compatible across different platforms and devices is crucial in Metaverse development. This interoperability is less of a concern in traditional 3D modeling, where outputs are usually intended for specific media formats. Developers must employ cross-platform compatible tools and test extensively to ensure a seamless experience across all user entry points.

11. Why Choose Rapid Innovation for Implementation and Development

In the fast-evolving tech landscape, rapid innovation is key to staying competitive and relevant. This approach is particularly beneficial in the fields of implementation and development, where the speed of technology adoption can significantly influence market success. Rapid innovation allows companies to quickly test and refine ideas, reducing the time from concept to market and enabling a more agile response to user feedback and changing market conditions.

Adopting a rapid innovation strategy can lead to significant competitive advantages. It enables businesses to capitalize on emerging technologies before they become mainstream, providing a first-mover advantage in new markets. Additionally, it fosters a culture of experimentation and learning, crucial for continuous improvement and adaptation in a technology-driven marketplace.

Moreover, rapid innovation is essential for meeting the increasing consumer expectations for personalized and continually improving products and services. By shortening development cycles, companies can more promptly address customer needs and incorporate feedback into subsequent iterations or products. This iterative process is vital for maintaining user engagement and satisfaction in a market where consumer preferences evolve rapidly.

In conclusion, choosing rapid innovation in implementation and development not only accelerates growth and adaptation but also enhances a company’s ability to innovate in response to technological advancements and market demands. This approach is particularly relevant in technology sectors, where the pace of change is relentless and the rewards for early adoption are substantial.

11.1 Expertise in AI and Blockchain Integration

The integration of Artificial Intelligence (AI) and Blockchain technology is revolutionizing various industries by enhancing security, efficiency, and automation. Companies specializing in this integration are increasingly sought after for their expertise in creating robust solutions that leverage the strengths of both technologies. AI's capabilities in data analysis, pattern recognition, and automation complement Blockchain's strengths in security, transparency, and decentralized control.

For instance, in the financial sector, AI can analyze vast amounts of transaction data to detect fraud patterns, while Blockchain can secure transactions and ensure transparency and traceability. This synergy not only improves operational efficiencies but also builds trust among users. Moreover, in supply chain management, AI can optimize logistics and inventory predictions, and Blockchain can provide immutable records of the goods' journey, enhancing the overall supply chain transparency and efficiency.

Further reading on the integration of AI and Blockchain can be found on IBM’s insights (IBM Blockchain).

11.2 Customized Solutions for Diverse Metaverse Projects

As the concept of the Metaverse gains traction, the demand for customized solutions that cater to diverse virtual environments is on the rise. Companies that specialize in Metaverse development are focusing on creating unique user experiences that are tailored to specific audience needs and industry requirements. These solutions range from virtual real estate platforms to immersive educational programs and interactive entertainment environments.

Customization in the Metaverse allows for the creation of highly engaging and interactive platforms where users can interact, learn, work, and play in ways that mimic real-world interactions but with the added benefits of digital enhancements. For example, in education, customized Metaverse solutions can create interactive and immersive learning experiences that are not possible in traditional educational settings.

To explore more about customized solutions in the Metaverse, check out articles from Wired (Wired Metaverse) and VentureBeat (VentureBeat Metaverse).

11.3 Commitment to Cutting-Edge Technologies and Scalable Models

In today's rapidly evolving tech landscape, a commitment to cutting-edge technologies and scalable models is crucial for businesses aiming to maintain a competitive edge. Companies that prioritize innovation are better positioned to adapt to changes and scale operations efficiently. This commitment involves not only adopting new technologies but also fostering a culture of continuous learning and improvement.

Scalable models in technology provide the flexibility to grow and expand without significant overhauls, thereby reducing costs and increasing efficiency. For example, cloud computing services offer scalable IT infrastructure that businesses can use to increase or decrease resources according to demand. Similarly, adopting scalable blockchain models can help businesses handle growing amounts of transactions without compromising on speed or security.
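Scaling according to demand typically comes down to a proportional rule. The sketch below follows the formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × observed / target), with a tolerance band to avoid churn. The function name, the 10% tolerance, and the replica limits are illustrative assumptions rather than any particular provider's settings.

```python
import math

def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=100, tolerance=0.1):
    """Scale the replica count by the ratio of observed to target load.

    current_metric and target_metric share a unit, e.g. average CPU utilization
    per replica. Ratios within `tolerance` of 1.0 leave the count unchanged.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target; avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(4, 90, 60))   # load 50% above target: scale out
print(desired_replicas(10, 10, 60))  # load far below target: scale in
```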

For more insights into scalable models and cutting-edge technologies, consider reading resources from TechCrunch (TechCrunch Innovation).

12. Conclusion

12.1 Recap of Key Points

In the preceding discussion, we explored the multifaceted world of 3D modeling, highlighting its evolution, current applications, and the technological advancements that have shaped its progress. We delved into various software tools that have become industry standards, such as Autodesk Maya, Blender, and SketchUp, each offering unique features tailored to different aspects of 3D modeling, from animation to architectural design. For a deeper understanding of these tools, you can visit their official websites or educational platforms that offer tutorials and user guides.

The integration of 3D modeling across diverse sectors such as entertainment, manufacturing, and healthcare was also examined, illustrating its critical role in product design, digital content creation, and even in surgical planning and simulation. The impact of emerging technologies like virtual reality (VR) and artificial intelligence (AI) in enhancing the capabilities and efficiency of 3D modeling processes was another significant point of discussion. These technologies not only streamline complex modeling tasks but also open new avenues for creativity and innovation in design.

12.2 Encouragement to Embrace Future Technologies in 3D Modeling

As we look to the future, the trajectory of 3D modeling is set to ascend with even greater momentum. The ongoing advancements in AI and machine learning are poised to revolutionize this field by automating routine tasks, improving precision, and reducing the time required to complete projects. For those interested in staying ahead in the field, keeping abreast of these technological developments is crucial. Websites like TechCrunch and Wired often feature articles on the latest trends in technology that could be immensely beneficial.

Moreover, the increasing accessibility of sophisticated 3D modeling tools and technologies invites professionals and hobbyists alike to explore and adopt these innovations. Whether it's enhancing realism in digital artworks or improving the accuracy of technical simulations, the potential applications are boundless. Embracing these technologies not only aids in personal and professional growth but also contributes to the advancement of the industry as a whole.

In conclusion, the dynamic landscape of 3D modeling offers a promising arena for both current practitioners and newcomers. By continuously learning and integrating the latest technologies into your workflow, you can significantly enhance your skill set, increase your marketability, and contribute to pushing the boundaries of what is possible in 3D modeling.